
Signal Peptide, Signal Sequences

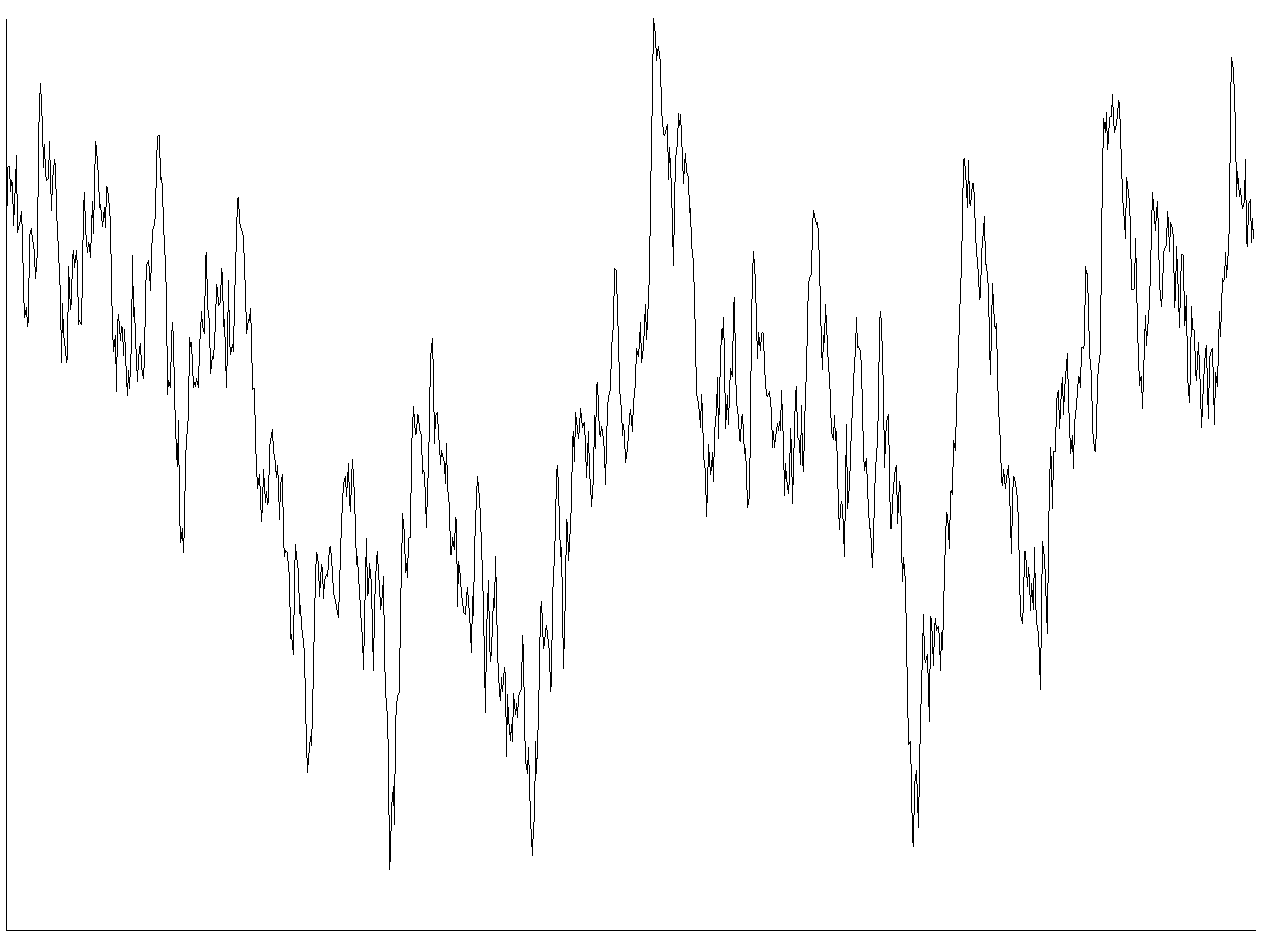

A signal is both the process and the result of transmission of data over some medium accomplished by embedding some variation. Signals are important in multiple subject fields including signal processing, information theory and biology. In signal processing, a signal is a function that conveys information about a phenomenon. Any quantity that can vary over space or time can be used as a signal to share messages between observers. The ''IEEE Transactions on Signal Processing'' includes audio, video, speech, image, sonar, and radar as examples of signals. A signal may also be defined as an observable change in a quantity over space or time (a time series), even if it does not carry information. In nature, signals can be actions done by an organism to alert other organisms, ranging from the release of plant chemicals to warn nearby plants of a predator, to sounds or motions made by animals to alert other animals of food. Signaling occurs in all organisms even at cellular levels, ...

William Powell Frith The Signal 1858

William is a masculine given name of Germanic origin. It became popular in England after the Norman conquest in 1066, and remained so throughout the Middle Ages and into the modern era. It is sometimes abbreviated "Wm." Shortened familiar versions in English include Will or Wil, Wills, Willy, Willie, Bill, Billie, and Billy. A common Irish form is Liam. Scottish diminutives include Wull, Willie or Wullie (as in Oor Wullie). Female forms include Willa, Willemina, Wilma and Wilhelmina. Etymology: William is related to the German given name ''Wilhelm''. Both ultimately descend from Proto-Germanic ''*Wiljahelmaz'', with a direct cognate also in the Old Norse name ''Vilhjalmr'' and a West Germanic borrowing into Medieval Latin ''Wil ...

Evolutionary Biology

Evolutionary biology is the subfield of biology that studies the evolutionary processes such as natural selection, common descent, and speciation that produced the diversity of life on Earth. In the 1930s, the discipline of evolutionary biology emerged through what Julian Huxley called the modern synthesis of understanding, from previously unrelated fields of biological research, such as genetics and ecology, systematics, and paleontology. The investigational range of current research has widened to encompass the genetic architecture of adaptation, molecular evolution, and the different forces that contribute to evolution, such as sexual selection, genetic drift, and biogeography. The newer field of evolutionary developmental biology ("evo-devo") investigates how embryogenesis is controlled, thus yielding a wider synthesis that integrates developmental biology with the fields of study covered by the earlier evolutionary synthesis. Subfields ...

Estimation Theory

Estimation theory is a branch of statistics that deals with estimating the values of parameters based on measured empirical data that has a random component. The parameters describe an underlying physical setting in such a way that their value affects the distribution of the measured data. An ''estimator'' attempts to approximate the unknown parameters using the measurements. In estimation theory, two approaches are generally considered:

* The probabilistic approach (described in this article) assumes that the measured data is random with probability distribution dependent on the parameters of interest
* The set-membership approach assumes that the measured data vector belongs to a set which depends on the parameter vector.

Examples: For example, it is desired to estimate the proportion of a population of voters who will vote for a particular candidate. That proportion is the parameter sought; the estimate is based on a small random sa ...
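The voter example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the "true" proportion of 0.55, the sample size of 1000, and the helper names `simulate_poll` and `estimate_proportion` are hypothetical and do not come from the text. The estimator used is the plain sample proportion, with a rough standard error computed from it.

```python
import random


def simulate_poll(true_proportion, sample_size, seed=0):
    """Draw a random sample of voters: 1 = votes for the candidate, 0 = does not.

    The true proportion is known here only because this is a simulation;
    in a real poll it is the unknown parameter being estimated.
    """
    rng = random.Random(seed)
    return [1 if rng.random() < true_proportion else 0 for _ in range(sample_size)]


def estimate_proportion(sample):
    """Sample proportion: the fraction of sampled voters supporting the candidate."""
    if not sample:
        raise ValueError("sample must not be empty")
    return sum(sample) / len(sample)


# Hypothetical values chosen for illustration only.
sample = simulate_poll(true_proportion=0.55, sample_size=1000)
p_hat = estimate_proportion(sample)

# Approximate standard error of the sample proportion.
standard_error = (p_hat * (1 - p_hat) / len(sample)) ** 0.5

print(f"estimate = {p_hat:.3f}, standard error = {standard_error:.3f}")
```

The estimate varies from sample to sample, which is the random component the measured data carries; a larger sample shrinks the standard error.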

Signal Recovery

Detection theory or signal detection theory is a means to measure the ability to differentiate between information-bearing patterns (called stimulus in living organisms, signal in machines) and random patterns that distract from the information (called noise, consisting of background stimuli and random activity of the detection machine and of the nervous system of the operator). In the field of electronics, signal recovery is the separation of such patterns from a disguising background. According to the theory, there are a number of determiners of how a detecting system will detect a signal, and where its threshold levels will be. The theory can explain how changing the threshold will affect the ability to discern, often exposing how adapted the system is to the task, purpose or goal at which it is aimed. When the detecting system is a human being, characteristics such as experience, expectations, physiological state (e.g. fatigue) and other factors can affect the threshold app ...

Signal Integrity

Signal integrity or SI is a set of measures of the quality of an electrical signal. In digital electronics, a stream of binary values is represented by a voltage (or current) waveform. However, digital signals are fundamentally analog in nature, and all signals are subject to effects such as noise, distortion, and loss. Over short distances and at low bit rates, a simple conductor can transmit this with sufficient fidelity. At high bit rates and over longer distances or through various mediums, various effects can degrade the electrical signal to the point where errors occur and the system or device fails. Signal integrity engineering is the task of analyzing and mitigating these effects. It is an important activity at all levels of electronics packaging and assembly, from internal connections of an integrated circuit (IC), ...

Noise (electronics)

In electronics, noise is an unwanted disturbance in an electrical signal. Noise generated by electronic devices varies greatly as it is produced by several different effects. In particular, noise is inherent in physics and central to thermodynamics. Any conductor with electrical resistance will generate thermal noise inherently. The final elimination of thermal noise in electronics can only be achieved cryogenically, and even then quantum noise would remain inherent. Electronic noise is a common component of noise in signal processing. In communication systems, noise is an error or undesired random disturbance of a useful information signal in a communication channel. The noise is a summation of unwanted or disturbing energy from natural and sometimes man-made sources. Noise is, however, typically distinguished from interference, for example in the signal-to-noise ratio (SNR), signal-to-interference ratio (SIR) and signal-to-noise plus interference ratio (SNIR) measu ...

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...

Information Content

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative way of expressing probability, much like odds or log-odds, but which has particular mathematical advantages in the setting of information theory. The Shannon information can be interpreted as quantifying the level of "surprise" of a particular outcome. As it is such a basic quantity, it also appears in several other settings, such as the length of a message needed to transmit the event given an optimal source coding of the random variable. The Shannon information is closely related to ''entropy'', which is the expected value of the self-information of a random variable, quantifying how surprising the random variable is "on average". This is the average amount of self-information an observer would expect to gain about a random variable whe ...
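As a rough illustration, the self-information of an outcome with probability p is commonly written as -log(p). The sketch below assumes that standard definition; the function name `self_information` and the default base of 2 (giving bits) are choices made for this example, not something the text specifies.

```python
import math


def self_information(probability, base=2.0):
    """Surprisal of an outcome with the given probability: -log_base(probability).

    With base 2 the result is in bits. Rarer outcomes give larger values.
    """
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in the interval (0, 1]")
    return -math.log(probability, base)


# A likely outcome is less surprising than an unlikely one.
for p in (0.5, 1 / 6, 0.01):
    print(f"p = {p:.4f} -> {self_information(p):.3f} bits")
```

A certain outcome (probability 1) carries no surprise, and the value grows without bound as the probability approaches zero, which is why a probability of exactly zero is rejected here.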

Entropy (information Theory)

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable $X$, which may be any member $x$ within the set $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X}\to[0, 1]$, the entropy is $\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x)$, where $\sum$ denotes the sum over the variable's possible values. The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base $e$ gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a v ...
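The definition above translates directly into a short Python sketch. The function name `entropy`, the convention of skipping zero-probability outcomes, and the two example distributions (a fair coin and a fair six-sided die) are assumptions made for illustration.

```python
import math


def entropy(probabilities, base=2.0):
    """Entropy of a discrete distribution: -sum of p(x) * log_base(p(x)).

    Outcomes with zero probability are skipped, following the usual
    convention that they contribute nothing to the sum.
    """
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log(p, base) for p in probabilities if p > 0)


fair_coin = [0.5, 0.5]
fair_die = [1 / 6] * 6

coin_bits = entropy(fair_coin)             # base 2: result in bits
die_bits = entropy(fair_die)               # base 2: result in bits
die_nats = entropy(fair_die, base=math.e)  # base e: result in nats

print(f"coin: {coin_bits:.3f} bits, die: {die_bits:.3f} bits ({die_nats:.3f} nats)")
```

Changing the `base` argument only rescales the result, which matches the remark that the choice of logarithm base sets the unit.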

Loudspeaker

A loudspeaker (commonly referred to as a speaker or, more fully, a speaker system) is a combination of one or more speaker drivers, an enclosure, and electrical connections (possibly including a crossover network). The speaker driver is an electroacoustic transducer that converts an electrical audio signal into a corresponding sound. The driver is a linear motor connected to a diaphragm, which transmits the motor's movement to produce sound by moving air. An audio signal, typically originating from a microphone, recording, or radio broadcast, is electronically amplified to a power level sufficient to drive the motor, reproducing the sound corresponding to the original unamplified signal. This process functions as the inverse of a microphone. In fact, the ''dynamic speaker'' driver, the most common type, shares the same basic configuration as a dynamic microphone, which operates in reverse as a generator. The dynamic speaker was invented in 1925 by Edward W. Kellogg ...

Microphone

A microphone, colloquially called a mic or mike, is a transducer that converts sound into an electrical signal. Microphones are used in many applications such as telephones, hearing aids, public address systems for concert halls and public events, motion picture production, live and recorded audio engineering, sound recording, two-way radios, megaphones, and radio and television broadcasting. They are also used in computers and other electronic devices, such as mobile phones, for recording sounds, speech recognition, VoIP, and other purposes, such as ultrasonic sensors or knock sensors. Several types of microphone are used today, which employ different methods to convert the air pressure variations of a sound wave to an electrical signal. The most common are the dynamic microphone, which uses a coil of wire suspended in a magnetic field; the condenser microphone, which uses the vibrating diaphragm as a capacitor ...